Quick Thoughts on Rationality

I hope to get researchers to take a look at this piece!

“Let’s hope that we can achieve rationality before irrationality kills us—it’s not too late.”

We live in terrifyingly irrational times—one friend wrote this to me:

We should surely try to combat irrationality, and it can sometimes work. But the current drift of Republicans, and some of the left, into irrational hysteria makes it as hard as it has been with religious extremists.

My friend’s comment is terrifying—I don’t know any way at all to make progress with religious extremists, so if the current drift into “irrational hysteria” really has rendered progress “as hard as it has been with religious extremists” then I fear for society’s future.

But I’m pretty bad at talking to people about their beliefs, so maybe people who are actually good at this sort of thing have found a way to make progress with religious extremists, in which case I’d be excited to learn from that progress.

Throughout this piece, I’ll use the shorthand “RD” to refer to a “rational discussion with someone about one or more specific political issues”.

This piece will lay out my quick thoughts about rationality—first I’ll address journalism’s collapse, and then I’ll address these four bedrock things that might be important to discuss before attempting an RD:

that truth exists

that we can learn something about the larger world through science

that people should investigate what they spread

that skepticism should be universal

On the four bedrock things:

you might not want to assume that the basic bedrock is there until you’ve actually asked the other person about it

you might want to know the other person’s views on these things before you attempt an RD

it might be regrettable to discover an hour into your attempt at an RD—or 100 emails into your attempt at an RD—that they aren’t 100% on board with one or more of these things

it might create a wonderful psychological vibe if you can establish some solid common values before discussing stuff that the two of you might disagree about

if they don’t accept one or more of the things, you obviously shouldn’t scoff at them or write them off as too irrational to talk to, but it might be useful to know in advance whether the two of you are on the same page with these things

I plan to interview various researchers who are experts on rationality—during those interviews, I’ll link to this piece and ask the researchers to comment on this piece.

And I hope that researchers who comment on this piece will weigh in on these two questions:

To what extent do people accept the four bedrock things?

What can be done to increase people’s commitment to the four bedrock things?

I’m excited to get answers to those two questions and to see what researchers say about this piece.

Journalism’s Collapse

I think that journalism’s collapse is a central reason for the “current drift” into “irrational hysteria”—see my two pieces about the solution to our journalism crisis:

“Can We Fix Journalism?” (13 October 2021)

“Is This the Most Exciting Idea Out There?” (29 October 2021)

As Robert W. McChesney says in the 13 October piece:

Local journalism has historically been the heart and the crucial part of US journalism. And that’s the part where the collapse is the worst today—there’s very, very little journalism left at the local level in the US. Regrettably, the effects are self-evident—even if people want to get involved locally and understand what’s going on in their communities, it’s really hard to do because there just aren’t enough resources there to make it possible. And so we have an information climate that’s ideal for propaganda, and rogues and demagogues, and all sorts of bullshit—that’s what we’re living through right now.

But I want to draw particular attention to the emotional situation in which people simply can’t hear what others are saying. This is a terrifying phenomenon. As Noam Chomsky put it:

The sign of a truly totalitarian culture is that important truths simply lack cognitive meaning and are interpretable only at the level of ‘Fuck You’, so they can then elicit a perfectly predictable torrent of abuse in response.

Take a look at this video:

A lot of right-wingers in the US today wouldn’t be able to hear what this video says and would regard this video as a textbook example of the liberal media cherry-picking things in order to make Trump and the GOP look bad or evil or dangerous.

But one of my friends was surprised that this video could be regarded as a textbook example of the liberal media at its absolute worst:

You must have sent the wrong link. I watched it. A very sober and serious report on the very ominous proto-fascism that is sweeping the country, quietly presenting the incredible racist lies of the disgusting figure who’s fanning the flames and disgraceful cowards like Hawley and Pence. Could only disturb those who are unsatisfied with proto-fascism and want to go the whole way.

So you have a situation where certain things can’t be heard—for example, you won’t usually witness right-wingers saying the following in response to the Haiti comment that the video discusses:

“Yes, that statement was racist, and that’s really messed up, and the GOP needs to fix that.”

“Yes, that statement was a lie, and it’s wrong to lie, and the GOP needs to fix that.”

Why don’t right-wingers just say these simple things in response? I assume that it’s because they hate CNN and Jim Acosta so much that the actual content is unhearable and is heard only as “Fuck You”.

Could right-wingers hear the exact same content if the content came from a source that they actually trusted and actually respected? I hope so—that’s one reason why it’s important to realize McChesney’s vision of a healthy journalism:

And the nice thing about a healthy journalism is that you have a multitude of different tones and a genuine garden of different viewpoints and approaches.

So the hope is that a “multitude of different tones”—and a “genuine garden of different viewpoints and approaches”—would allow right-wingers to hear things that they currently can’t hear.

Find Out if They Think That Truth Exists

An RD seems to require that participants accept that truth exists.

So it might be important to have the deeper conversation about whether truth exists—and about whether it’s possible to be mistaken about something—before your attempt at an RD.

On the issue of whether truth exists, Timothy Snyder wrote this in his 2017 book On Tyranny:

To abandon facts is to abandon freedom. If nothing is true, then no one can criticize power, because there is no basis upon which to do so. If nothing is true, then all is spectacle. The biggest wallet pays for the most blinding lights.

So arguably, eliminating the concept of truth undermines freedom as well as the ability to inquire about the world alongside other human beings.

Find Out if They Think That We Can Learn Something About the Larger World Through Science

And RDs also seem to require—whenever science is involved in any way—that participants accept:

(1) that there’s a larger world out there that lies beyond what we ourselves have experienced

(2) that we can learn about this larger world through science

So you might want to find out if you’re on the same page regarding (1) and (2) before attempting an RD that involves science in any way.

There’s a striking solipsism these days where people (A) think that their experiences necessarily reflect the larger world and (B) dismiss scientific investigation into the larger world insofar as that investigation goes against their experiences.

Johnny Harris made an important video about people who believe that Earth is flat—according to Harris, these people subscribe to a “paradigm that mistrusts anything that you can’t touch and feel”.

And this same “paradigm” shows up when people invoke—during discussions about the larger world—their stories about people they know who:

got infected with Covid and found Covid to be no big deal

got vaccinated for Covid and experienced horrible side effects

had a great experience with a certain treatment for some condition

had a terrible experience with a certain treatment for some condition

And social collapse might be responsible for the terrifying opposition to science that you see these days. One of my friends told me that the state of a society affects how much its citizens value the scientific enterprise:

It’s a general problem in the US. Many factors. Some are deeply rooted in the society. In more recent years, the neoliberal assault launched by Reagan and joined by others has led to a considerable degree of social collapse, visible elsewhere too but quite extreme in the US. The shift of the Republicans from a normal political party to a radical insurgency that rejects parliamentary politics and retains its ability to serve the super-rich and the corporate sector by inflaming culture wars, including hatred of science, has played an important role. The end result is that scientific communication that leads to rational responses in many other countries can quickly be converted here into wild conspiracies. Not just anti-vax and climate denial, but also cults of a kind one rarely finds elsewhere, like the belief (over half of Trump voters) that the government is run by a satanic cult of pedophiles who torture children (including Biden, Clinton,…).

But I should add the caveat that it might be counterproductive to tell people about scientific investigation and scientific data. I wrote a piece about this bleak possibility:

“What’s the EFFECTIVE Way to Discuss Climate?” (3 July 2021)

It’s hard to imagine that it might be a bad idea to invoke scientific investigation and scientific data, but it’s crucial to adopt a strategy that works.

Find Out if They Think That People Should Investigate What They Spread

Many people apparently don’t see themselves as responsible for the information and thoughts and ideas that they transmit to others—there seems to be a common attitude that it’s OK to spread whatever content one encounters and then leave it up to others to investigate whether that content holds up.

So before you attempt an RD, it might be important to find out if the person adopts this attitude.

In my view, this attitude is:

(1) lazy

(2) irresponsible

(3) immoral

(4) dangerous

Joe Rogan is a major example of this attitude—in general, he doesn’t investigate what his guests say and merely serves as an uncritical conduit for whatever his guests happen to be putting out there:

I don’t mean to suggest that it’s always easy to investigate what you spread—it’s a difficult thing to do.

I’ve been on Substack for almost a year now, and I’ve had a lot of anxiety about certain things that I’ve published—that said, here are three reasons why I don’t feel too bad about my own track record on vetting things:

I’m a lone Substacker, and I don’t (currently, at least!) have the resources of an established journalist

the fact that I have anxiety about this at least demonstrates that I care about getting things correct

I’d immediately make a correction if anyone ever pointed out something that I’d gotten wrong

In his 2017 book, Timothy Snyder wrote the following about one’s responsibility to investigate what one transmits—this applies across the board, not just to the stuff that we post on the internet:

“If the main pillar of the system is living a lie,” wrote Havel, “then it is not surprising that the fundamental threat to it is living in truth.” Since in the age of the internet we are all publishers, each of us bears some private responsibility for the public’s sense of truth. If we are serious about seeking the facts, we can each make a small revolution in the way the internet works. If you are verifying information for yourself, you will not send on fake news to others.…

We do not see the minds that we hurt when we publish falsehoods, but that does not mean we do no harm. Think of driving a car. We may not see the other driver, but we know not to run into their car. We know that the damage will be mutual. We protect the other person without seeing him, dozens of times every day. Likewise, although we may not see the other person in front of his or her computer, we have our share of responsibility for what is on the screen. If we can avoid doing violence to the minds of unseen others on the internet, others will learn to do the same. And then perhaps our internet traffic will cease to look like one great, bloody accident.

And Snyder wrote this as well in his 2017 book:

Take responsibility for what you communicate with others.

And this responsibility increases when you’re talking about matters of life and death like Covid or global heating—the graver the consequences of your being wrong, the greater your responsibility to investigate.

Find Out if They Think That Skepticism Should Be Universal

There’s a ubiquitous phenomenon where people will:

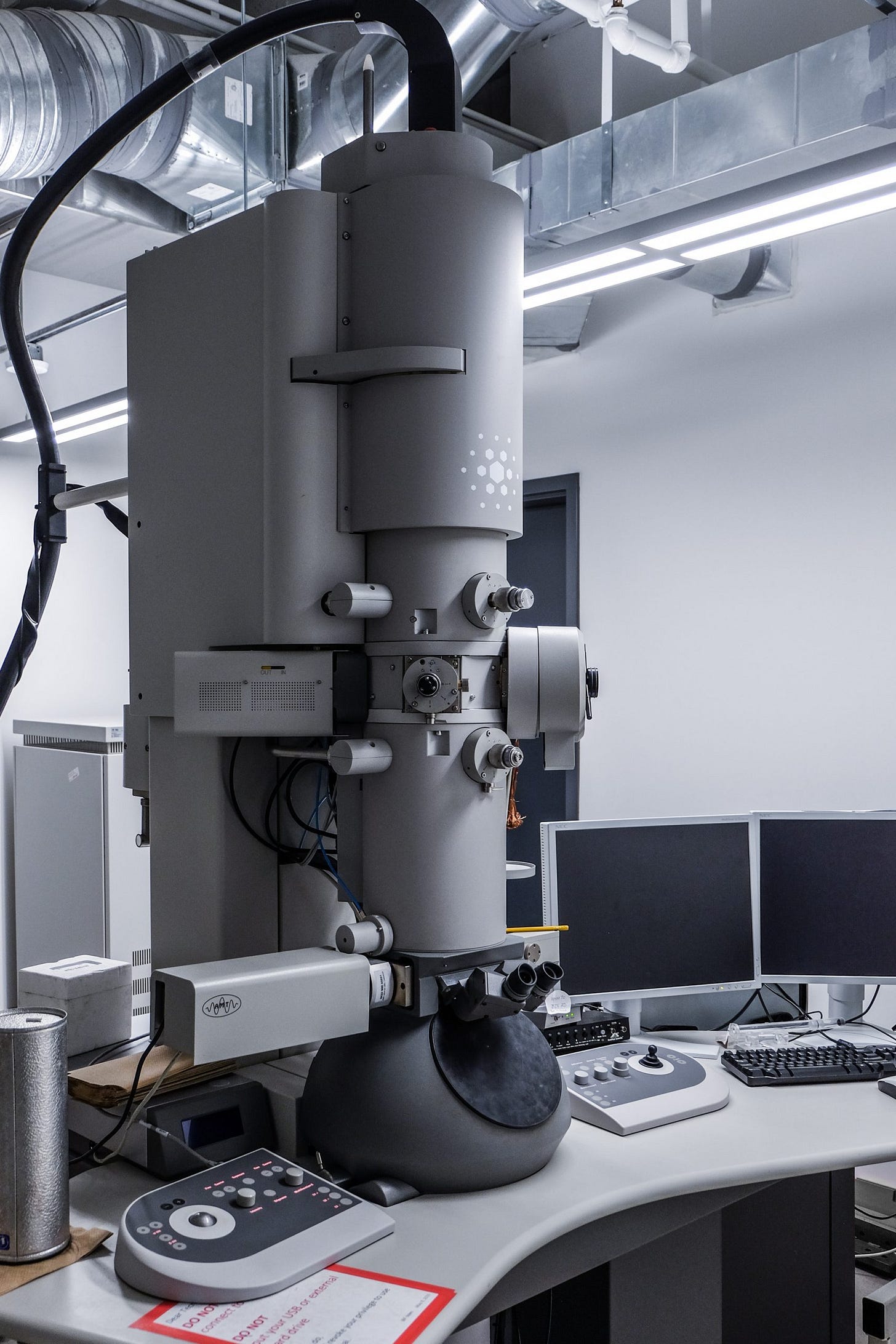

(1) put under an electron microscope the material that challenges their beliefs

(2) swallow whole the material that lines up with their beliefs

So before you attempt an RD, it might be useful to find out if they think that this phenomenon is OK and cool and normal.

You see this phenomenon all the time:

the people who watch—or listen to—right-wing media will put CNN’s output under an electron microscope, but these people will remain 100% blind to right-wing media’s problems and will swallow whole the nonsense that right-wing media feeds them

the people who regard the mainstream media as too biased to waste time with will put the mainstream media’s output under an electron microscope, but these people will remain 100% blind to their own sources’ problems and will swallow whole the nonsense that their own sources feed them

people will put under an electron microscope the scientific studies that challenge their beliefs (whether those beliefs are about global heating or Covid or nutrition) and will swallow whole the scientific studies that line up with their beliefs

There wouldn’t be very much ideological nonsense—and straight-up misinformation—in our society if people were universal skeptics instead of selective skeptics. In a world of universal skepticism, audiences wouldn’t fall for BS and wouldn’t spread BS, even though deliberately dishonest people would still be deliberately dishonest.

How exactly do you induce people to universalize their skepticism? I’m not sure. That’s a great question for the researchers whom I plan to interview about rationality.

I think that two hallmarks of a universally skeptical society would be:

(A) that people would say “I don’t know” a lot more

(B) that people would express confident opinions much less often

Let’s hope that we can achieve rationality before irrationality kills us—it’s not too late.