Will We Understand Our Brains?

An interview with David Poeppel.

David Poeppel is a fascinating scientist—he’s Professor of Psychology and Neural Science at New York University; Director of the Department of Neuroscience at the Max Planck Institute for Empirical Aesthetics; co-founder of the Center for Language, Music and Emotion; and Managing Director of the Ernst Strüngmann Institute.

Poeppel is an extremely deep thinker, has a wonderful bird’s-eye view of his field, and—as a bonus!—is a hilarious person to talk to.

I was honored and thrilled to be able to interview Poeppel. Below is my interview with him, which I’ve edited for flow, organized by topic, and supplemented with hyperlinks.

Science

1) What do you love about science?

It’s like art—it’s one of the only activities I’m aware of that come with substantial intellectual freedom. I’m unbelievably spoiled and privileged in many ways, and not everyone in science has the freedom that I have.

I think that it’s a deep part of human nature to try to figure stuff out and to follow your hunches and your curiosities, and it’s joyful to do that. Whether you want to stare at a plant or look at the sky or measure something, I think there’s something deeply emotional and moving when you say: “I wonder what happens when you do this? And I wonder what happens when you look at that and when you turn it upside down?”

Science is also a social activity—at least the kind of science I do—and I get the greatest pleasure from working with these amazing students and postdocs that I have who aren’t yet as jaded and cynical as I am and who are fun and insightful and who know way more stuff than I do. They’re smarter, and they’re young, and they’re technically sophisticated, and they can do all the stuff that I don’t know how to do—“Wow! You can do that?” It’s such a privilege to be in my lab meetings with these people—whether the meetings are in Germany or New York—and discuss ideas or experiments or other people’s papers.

I get to talk with interesting, smart, funny, engaging, lively people about the cool stuff that we want to talk about. We have the ultra-luxury of just discussing ideas—that’s just amazing.

Probably the only similar experience I know is the arts. Like privileged professors such as myself, some very privileged artists experience the great joy of being able to do whatever they want and follow their hunches. It’s a different and much tougher situation when you’re a young artist or a young scientist who’s struggling to fulfill the criteria for success that’ll allow you to have that independence.

So science really is deeply satisfying in its own right.

Science is also a system of organizing knowledge in a way that moves us forward. As Max Planck said: “Insight must precede application.” And that applied knowledge is wonderful—it’s great to have anesthesia, be able to give birth without dying, and so on.

I’m convinced that the things we understand least about the human mind and brain are the things that matter to us most—language (which makes us who we are), the experience of emotion, and the experience of music are deep parts of human nature, but computers aren’t good at these things. Computers can do automatic speech recognition, but they can’t help us with the creative aspect of language use, the generativity and compositionality of language, emotional experience, or the almost supernatural experience of music.

2) I’m surprised that you went to those three examples, though, because basic biology is incredibly mysterious.

But I work on the human part of biology, so I’m not interested in the navel or the liver or the knee joint, but instead in how the mind is organized.

And I find most mystifying the three things that I mentioned because we don’t even know how to ask the questions in the right way—in Chomsky’s parlance, those things are all mysteries, not problems. In these areas, it’s not even clear that we’re in the ballpark of scratching the surface of an answer, and we might be going down the completely wrong path.

3) It seems like pragmatics presents a bleak brick wall and that we just can’t move the needle on it. Science is beautiful when you have a lively field, and my own anxiety is that one day no scientific field will be lively anymore—John Horgan has a book called The End of Science. I want scientific fields to always be lively and always be moving the needle. Is your field lively?

Our field is super lively because we don’t know our asses from our elbows.

But a more charitable reading of John Horgan’s book is that we live in an intelligible world where science could end when different areas of inquiry increasingly align and we eventually generate unified understanding and systematic insight—science would become unified, which wouldn’t be a bad thing; that would be cool.

4) There’s a sense that physics was really booming in the 20th century and that biology is now the most exciting area.

The deep questions of physics become mathematics and are very difficult to sort out, and the parts of physics that are associated with measurements are also pretty difficult.

Biology has changed a lot because we have more insights into the parts and we have methodological tools and computational methods that allow us to look at things in a way that wasn’t previously possible at all.

On behalf of the National Science Foundation, I gave a talk in Congress about big scientific problems many years ago, and the two biggest problems I could think of were the structure of the universe and the structure and function of the human mind and brain. The first one is super interesting and incredibly exciting and so cool, but very remote from us, very abstract, and very unlikely to yield immediate technological applications. The second one is really complicated, but it’s right here, it’s accessible, and it has a direct impact on everything we do, so it’s highly relevant to everyone and we should be enthusiastic about studying it—it would be useful to understand what depression is and what bipolar disorder is and why language is the way it is, and we could and should answer these questions.

5) CRISPR was a wonderful breakthrough in biology, but apparently there are mysteries about the most basic genetic pathways in plants that give geneticists a lot of humility about the prospect of understanding human genetics.

I think there are grounds for optimism because there’s a sense that some of these things can be understood.

But in human neuroscience it’s not even clear how to do it, and that’s the thing that makes me nervous.

For example, you need to worry about the problem of storage when you study language, or when you study anything about cognition. You talked to Gallistel about this, and I think that Gallistel is absolutely on the right track there. I don’t know anything about the specific molecular-biology proposals, but I think the logic of his argument is completely right—Gallistel is right to raise the flag and say: “The way our story goes right now is completely unsatisfactory.” But it’s very difficult for Gallistel because there’s so much political resistance: thousands of careers hang on the standard story of memory.

In my area of research a central problem is that everyone has a bag of 10,000 words or 100,000 words in their head—a mental lexicon—and I don’t even know how a single thing is stored in there.

We talk about things like Merge and compositionality and how you put things together, but we don’t even know what the basic stored elements are in the first place. What are the primitives? I don’t know, so I’m stuck, and I don’t know what the next step in this research program is supposed to be. And I work with smart people who’ve thought about this problem from many different angles, and this issue is a real showstopper.

So a lot of descriptive stuff in my field is maybe on the right track, but probably it’s “not even wrong”.

6) Isn’t Pietroski’s work supposed to move the needle on this stuff?

No—Pietroski’s work helps us to understand what kinds of properties we want to look for.

This is the kind of stuff that Paul Pietroski and Norbert Hornstein and Bill Idsardi and I used to discuss at lunch all the time: What are the linking hypotheses? Pietroski approaches this from the point of view of formal semantics, and Hornstein approaches the same problem from the point of view of syntax, and Idsardi approaches the same thing from the point of view of phonology, and I approach it from the point of view of neuroscience. And we hung out a lot together because we liked each other and had fun trying to figure out what the linking hypotheses would look like.

Pietroski’s internalist conception of semantics points you to a certain way to think about what an event is and what a primitive is. And Pietroski and Hornstein posit things about the kind of elementary operations to look for.

But then I have to say to myself: “OK, if you guys need those, and that’s the bottom line, then I need to be looking for that in brain tissue.” But I don’t even know how to begin to do that. So if Pietroski and Hornstein and Idsardi and Chomsky provide me with a certain parts list of what I need to look for, and I think that these scholars are on the right track, then I need to find the structures that implement the elementary “Lego blocks” that they’ve posited.

So that’s the challenge, and we don’t know anything about how to do that—notwithstanding the fancy papers that are always coming out that always skirt the really hard issues.

7) Why do you love your field of science in particular?

I think that working on the brain basis of language and emotion and music is the coolest thing to do because it’s what makes you you—it’s core to your identity. I use language, and I feel things, and I listen to music, and so these are the attributes that we share as humans that I’d like to know more about. These experiences are at the center of our personal existence as well as our political existence.

It’s also a lively field. There’s vigorous debate—if you’re going to get into this field, you have to be ready to take it and dish it out, and you can’t be too shy about stuff because it can get ugly.

In my field, you have to enjoy ideas and the playfulness of ideas.

And the field is also exciting technologically—the new neuroscience tools are remarkable from a purely engineering point of view, even though I’m on the record complaining about how neuroscience uses these tools. In the last 20 years, people have built a machine that can take a picture inside your head with a spatial resolution of a millimeter—that’s just an amazing achievement, even if we’re asking questions that are sometimes kind of lame.

To illustrate why I love my field, let me describe some research that sounds simple and banal but is actually really difficult, really fun, and really puzzling. I have two lab members in New York doing experiments on the topic of what happens in your brain just before you say a word—if you say the word “pencil”, the very last thing that happens before you say the word is you send commands to your lips and tongue and throat, and then “pencil” comes out.

But what happens before you send those commands? Do you actually assemble each individual phoneme? Or each individual syllable? My colleagues’ experiments try to decode brain activity so that we can understand what kinds of packages you put together before you say something. What are the packages? Do you assemble them at the start of the process or at the end?

So even the most simple-seeming process like saying “pencil” has a large number of steps and subroutines that lead up to it. They’re doing it one step at a time and trying to figure out the size of the units that you use.

Some linguistic theories have suggested that there’s a “mental syllabary”: your brain stores pre-compiled syllables that are ready to fire off whenever you send a command. That would be interesting because such a store would have to be language-specific and frequency-dependent, meaning that frequently used sequences are more available than other sequences.

Exciting Projects

1) What are the most exciting projects that you’re currently working on and why are these projects exciting?

You shouldn’t work on any projects that don’t excite you—every project you’re working on should be exciting.

You should do bold things, but at the same time you need to do normal things in order to eat and pay your rent and pay your health insurance—it would be irresponsible to do only bold things.

I have two labs where people work on lots and lots of different topics.

Among the topics that I’m currently thinking about, I’m most excited about this question: What does it mean to store a mental dictionary? This question is very closely aligned with the questions that Gallistel asks, except operationalized in a very specific way—I’m not asking about engrams in general but instead the thing that I know that everybody has in their head: a bag of words. Or maybe it’s not a bag—maybe it’s a box. [Laughs.]

I can teach you a new word right now. See this thing that you always play with? [Flicks the flickable thing on his pen’s cap.] That’s called the “blicket”.

2) Really? I didn’t know that. [Laughs.]

It’s not—I just made it up. “Blicket” is a classic non-word; I think Lila Gleitman introduced it. And I made up this meaning for it.

But now you have a lexical entry that pairs the phonological form with the meaning that I just showed you, and you’re never going to forget that sound–meaning pairing. And I could write it down and then you’d have a sound–letter–meaning association. And I could sign it for you with sign language, or whatever.

So what does it mean to have that kind of information? How is the information encoded? What is the nature of encoding information so that you can store something and then—instantaneously—pull it out and recognize it and put it together with another thing? I’m starting to think about how to do experiments about this topic.

Lexical items are really rich and complex, so it’s not like the word “cat” just means “fuzzy thing with four legs” or something—it’s really complicated. I’m doing experiments right now on the word “not”, and even simple negation turns out to be pretty hard, so once you move up to things like “house” and “river” it becomes even richer and even more complex.

I’ve also been working for a long time on what I broadly call the “temporal structure of perceptual experience”—I want to investigate the temporal primitives of experience.

I find it interesting that human spoken communication is really quite fast—it’s about 4–5 syllables per second on average. And surprisingly, that rate holds across languages and across speaking styles.

I find it particularly interesting that the following things all happen at the same rate: human spoken interaction, eye movements during reading, whisking (something that rats and mice do), visually exploring a scene, other perceptual tasks, and other cognitive tasks. There’s something very fundamental about the way that the brain’s circuitry packages information to make the Lego blocks that you deal with.

3) To what extent is money a limiting factor in your experiments?

I struggle with funding in New York, despite the remarkable productivity of my team there.

But in Europe it’s a bit different because they’re generous in funding basic research.

4) What are the most exciting projects that you know of that others are working on and why are these projects exciting?

To some extent, the most exciting things are the things of the future—things like storage.

But I’m excited about my labs’ experiments about elementary structure-building: How do you take two things and stick them together so that the third thing comes out? A lot of people in neurolinguistics—including myself—work on that topic.

Famously, linguists all agree that constituency is a real feature of language representation and processing—even anti-generativist researchers agree that constituency is a real property that requires explanation. I think that we actually have convincing neural evidence about how constituent structures are built.

My own—unpopular—belief is that syntactic operations are actually the easier problem and that we can actually expect to make a ton of progress in the next 20 years on questions about concatenation and set formation and so on, whereas lexical storage is much harder to deal with. Operations are easier to measure—we can do experiments that show when this or that is active and when you’re concatenating something and so on, though we need to improve our experiments on that front. But in contrast, the notion of storing things and pulling things out of storage is a much more difficult problem—we don’t know how to do those experiments.
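The contrast between concatenation and set formation can be made concrete with a minimal Python sketch. It assumes the textbook formulation of Merge as binary set formation, Merge(X, Y) = {X, Y}; the names `concatenate`, `merge`, and `constituents` are hypothetical, chosen for illustration, and this is not code from either lab. Concatenation keeps linear order but records no grouping, while set formation records hierarchy (constituents) and ignores order.

```python
from typing import FrozenSet, List, Tuple, Union

# A syntactic object is either a lexical item (a plain string)
# or an unordered set built by merge. (Illustrative model only.)
SyntacticObject = Union[str, FrozenSet["SyntacticObject"]]


def concatenate(x: Tuple[str, ...], y: Tuple[str, ...]) -> Tuple[str, ...]:
    """Linear concatenation: keeps word order but records no grouping."""
    return x + y


def merge(x: SyntacticObject, y: SyntacticObject) -> FrozenSet[SyntacticObject]:
    """Binary set formation, Merge(x, y) = {x, y}: unordered but hierarchical."""
    return frozenset({x, y})


def constituents(obj: SyntacticObject) -> List[FrozenSet[SyntacticObject]]:
    """Collect every object built by merge (every constituent), inner ones first."""
    if isinstance(obj, str):
        return []  # a bare lexical item is not itself built by merge
    found: List[FrozenSet[SyntacticObject]] = []
    for part in obj:
        found.extend(constituents(part))
    found.append(obj)
    return found


if __name__ == "__main__":
    # "the dog barked" analyzed as [[the dog] barked]
    subject = merge("the", "dog")
    clause = merge(subject, "barked")

    print(concatenate(("the", "dog"), ("barked",)))    # flat sequence, no grouping
    print(len(constituents(clause)))                   # {the, dog} plus the whole clause
    print(merge("the", "dog") == merge("dog", "the"))  # order plays no role in a set
```

The design choice of `frozenset` is deliberate: sets are unordered and can nest, which is exactly the property that separates hierarchical structure-building from stringing words together.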

So both are really important and exciting, but in Chomsky’s parlance one of them is a problem and one of them is a mystery—for me the grammatical processing part is a problem, but the storage part is a mystery.

5) Pietroski told me that you can use systems that you understand to try to get leverage on systems that are more mysterious.

That’s the idea—in physics, they used things they could observe and measure (like photons) to make inferences about unobservable things (like quantum mechanics).

One very bold idea would be to branch out and see if the notion of storage—and even conceptual storage—could be studied in animal models in a way that would inform us from a totally different angle. Animals have memory for very abstract conceptual things, so you could abandon human-specific research programs and ask: What does it mean to store anything abstract? And we can do different experiments on animals from the ones that we can do with humans, so there are more possibilities with animals.

Technology

1) It’s definitely humorous to see the articles in the New York Times that include images of the brain, since those images might give laypeople the sense that these scans actually explain something.

The electrophysiological methods give us great temporal resolution.

And the neuroimaging methods give us great spatial resolution.

But my view is that we have bad conceptual resolution—our ideas are bad.

However, the fact that I can stick you in a machine in the room next door and measure stuff inside your head is wicked—100 years ago, if you’d told people that this would be possible, they would’ve said you were insane.

The resolution is getting better and better, and it’s exciting for basic research, and it goes without saying that it has major clinical applications for stroke patients and for people who suffer from neurodegenerative disorders, epilepsy, affective problems, and other clinical issues.

2) To be clear, though, this technology hasn’t led to much insight, has it?

I think that’s too harsh—we can say that among friends, but these techniques are 20 years old or newer, and the technologies are developing rapidly. There are many important insights, and there’s hope for many further important insights, but we just need to enforce high standards of experimental design and make sure that we’re asking the right questions.

It can be difficult to use data from this new technology to adjudicate between interesting theories in linguistics or cognitive science or psychology, but even there, there’s progress if the questions are operationalized in an intelligent way. For example, my colleague Alec Marantz has used electrophysiological techniques like magnetoencephalography to show—through really interesting and elegant experiments—that morphemes are essential units. So this work shows that theories that say that everything is lexicalized are wrong and that theories that say that there’s morphological decomposition are right:

“Modeling Morphological Processing in Human Magnetoencephalography” (2020)

“Morphology and the mental lexicon: Three questions about decomposition”

3) How much will resolution improve—and will that improvement really help to move the needle on anything?

Temporal resolution is already arbitrarily good for electrophysiological methods. Spatial resolution will continue to get a little better—the physics and engineering will continue to be developed, and the data analysis approaches will continue to improve.

From a physiological perspective, my own hunch is that there will be a big opportunity for new insight when we reach the same kind of experimental resolution that animal experiments have.

To picture the human cortex, imagine two circles—one circle for each hemisphere. And imagine that these circles each have the area of a medium pizza. And imagine that each of these circles is made of three-millimeter-thick salami. And then imagine that you crumpled each circle up.

We’ve known since the start of the 20th century that the primate cerebral cortex is organized into layers—the mammalian cortex has six layers, and there’s something really deep and useful about those six layers, since those layers are highly conserved.

But we don’t know what exactly those layers are for, though scientists have hypothesized that the wiring diagrams of these layered structures might serve to execute computations like predictive processing.

So maybe something quite game-changing could happen once we reach that level of resolution, and then we can maybe shed more light on the question that I’m always asking: What is the parts list—what are the elementary Lego blocks?

4) Isn’t our parts list obviously not complete and obviously not correct?

Yeah, you’re absolutely right—biologists and cognitive scientists who claim otherwise are being too optimistic. Maybe in 10 years we’ll find out something like: “Aha! When five cells are together and wired up like this, that’s the primitive Lego block!” We just don’t know.

5) Psychiatry is in the Dark Ages—they can’t even tell you the neurological basis of depression or bipolar disorder! It’s brutal how they can’t even explain the most basic things about the various horrific disorders that people are suffering from!

Part of my new job in Germany is to stimulate the connection between systems neuroscience and mental health issues—the connection is woefully inadequate right now, but many people are trying hard to move the needle, since everyone understands how significant these issues are.

Good Experiments

1) Why is the 2017 paper “Neuroscience Needs Behavior” a must-read?

People might see the title and think that the paper has something to do with behaviorism, so a better title would’ve been something like “Neuroscience Needs Careful Analysis of Cognition and Behavior”, but we needed a short title.

Our point in that paper—which is kind of banal, but I think important—is that no matter what creature you’re studying (humans, mice, marmosets, whatever) you want to very carefully specify the behavioral or cognitive question that you’re asking and then approach the neuroscience experiment with that question in mind.

If you’re studying barn owl sound localization, be really clear about what exactly that phenomenon is and how it’s solved, and try to really decompose the phenomenon and carefully characterize it. Once you’ve done that meticulously, approach the experiment with that in mind.

You have to really understand whatever the domain is—if your experiment is about word recognition, you have to really know something about word recognition.

We’ve kind of lost this tradition over the years, but this was a really critical part of the ethological research of the 1950s, and people like Tinbergen and Lorenz did an amazing job at analyzing the natural behaviors that creatures would do in their natural habitats. We stick people into labs and they sit in a chair and move a joystick or something, but you ultimately want to understand what the creature does in its natural habitat.

This analytic process will sharpen your hypothesis. I wrote a paper with one of my grad students where we argue in response to György Buzsáki’s The Brain From Inside Out that you always have a hypothesis—even if it’s implicit—and that it’s crazy to think that you could ever just characterize hardware:

“Against the epistemological primacy of the hardware: The brain from inside out, turned upside down” (2020)

You won’t get anywhere with just an implementational level of analysis without any hypothesis about function—if you open a car and look at the engine, you can look at the engine parts and draw the parts and measure things, but no matter how much you study the engine you won’t know how the engine works. And if you think that you have no hypothesis about function, you’re kidding yourself because you’re bringing an implicit hypothesis to the task at hand.

Your story will go nowhere if you don’t have an analysis of the cognitive science—in our 2020 paper, we discuss the need to comprehensively analyze an ethologically motivated behavior that we’re trying to explain.

2) What is “conceptual resolution”, and why do we need better “conceptual resolution”?

As I use the term, “conceptual resolution” is about how much we’re able to decompose the concepts that we use in cognition and neuroscience in a way that’s well-motivated and sensitive to evidence—are we using the right concepts and are we treating them with the analytic care that they deserve?

If we have good conceptual resolution, that’ll help us to take the parts list of linguistics and cognitive science and the parts list of biology and neuroscience and then find principled links between the primitives of cognition and the primitives of the brain.

You don’t need better equipment to find the linking hypotheses—you need better conceptual resolution so that you can zoom in and out on the ideas in a way that could ultimately reveal how to find the linking hypotheses.

Look at the unification of chemistry and physics—physics could no longer deal with what was going on in chemistry, so physics had to conceptually reorganize and come up with new stuff in order to handle the weird new chemical phenomena. You had the parts list of physics and the parts list of chemistry and a bunch of phenomena, and nothing aligned at all until physics was able to say: “Wait a minute—if we change a lot of stuff, we’ll suddenly be able to align.”

3) Pietroski told me that he wants to eventually do experiments to shed light on what computational resources the human child brings to the table. He says that there has to be an answer to this question, and he says that that answer would teach us something important about cognition and natural computation.

I’d have to discuss this with Pietroski directly—actually, it would be fun to talk to Pietroski about this!

You should bring people together and do group interviews!

4) That’s a great idea!

But the question about computational resources is: With respect to what task? So maybe Pietroski wants to know what a learner brings to the table with respect to quantifiers like “most” and “every” and “none”, and then you could pose a question, watch the learner carry out behavioral tasks, and infer from that what kind of grammar underlies that performance—is it context-free or context-sensitive or mildly context-sensitive? (The party line on human language is that it’s mildly context-sensitive.)
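For readers unfamiliar with the grammar classes named here, a minimal Python sketch of the standard textbook contrast may help. The pattern aⁿbⁿ can be generated by a context-free grammar and recognized with a single stack, whereas aⁿbⁿcⁿ cannot be generated by any context-free grammar (mildly context-sensitive formalisms such as tree-adjoining grammar can generate it). The functions `is_anbn` and `is_anbncn` are hypothetical illustrations of those two formal languages, not a model of a learner or of any experimental task.

```python
def is_anbn(s: str) -> bool:
    """Accept a^n b^n (n >= 1) using one stack, the way a pushdown
    automaton (the machine model for context-free languages) would."""
    stack = []
    i = 0
    while i < len(s) and s[i] == "a":            # push one symbol per 'a'
        stack.append("a")
        i += 1
    while i < len(s) and s[i] == "b" and stack:  # pop one symbol per 'b'
        stack.pop()
        i += 1
    # Accept only if all input was consumed and every 'a' was matched.
    return len(s) > 0 and i == len(s) and not stack


def is_anbncn(s: str) -> bool:
    """Accept a^n b^n c^n (n >= 1). No context-free grammar generates this
    language, so one stack is not enough; here we simply compare block sizes."""
    n = len(s) // 3
    return n > 0 and s == "a" * n + "b" * n + "c" * n


if __name__ == "__main__":
    for word in ["ab", "aabb", "aab", "abab", "abc", "aabbcc", "aabbc"]:
        print(word, is_anbn(word), is_anbncn(word))
```

In a behavioral study of the kind described, the inference would run the other way: from which patterns a learner can and cannot track, one tries to bound the class of grammar underlying the performance.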

You could likely answer the question, but I’m not completely sure whether it would be an interesting answer—it could be descriptively adequate at some level of abstraction, but I’m not so sure that I really need to know the answer in terms of explanatory adequacy.

I’m a neuroscientist, but I self-identify with the way Pietroski goes about things (for meaning) and the way Hornstein goes about things (for structure) and the way Idsardi goes about things (for sound)—we all share the notion that you want to find the ontological primitives. For Pietroski, those primitives could be certain very basic operations that a semantic system needs—I always found that approach very appealing because he’s saying: “In order for you to do this very basic semantic process, you need operation A and B and C.” And likewise people like Hornstein say: “To get any kind of theory of syntax off the ground, you need A and B and C.” So that appeals to me—they’re trying to be decompositional in the same way that I’m trying to be decompositional.

I really cherish my discussions with those guys—at the bagel place we would go to—where I’d ask: “What can you not live without? If I took away all your machinery and your lambda calculus and your whatever, what is the smallest set of things that you could build a full semantic system or a full syntactic system from?”

Those are the kinds of discussions we would have, and after those discussions I’d walk away saying: “OK, if that’s really necessary on logical and conceptual grounds, my job is to figure out what a nervous system would have to be able to do to accomplish that.”

This business where you find the ontological primitives is a very particular way to do research—you search for the metaphysical basis of what we are, and then you find linking hypotheses between those primitives and neurological primitives, and then you do experimental work to try to get somewhere. I think that it’s a very useful way to approach things because I respect their evidence and they respect my evidence and it’s a problem of struggling toward alignment.

When you read a paper about the brain and language, first ask yourself: “Did you learn anything about neuroscience?” Rarely. And then ask yourself: “Did you learn anything about language?” Rarely. And then ask yourself: “Did you probably learn nothing?” Most often. So that’s not cool!

5) What’s the better outcome? That you learn something about both?

That’s the aspirational outcome that’s almost never true—you almost never do an experiment that’s insightful about both biology and linguistics. But we know a lot about language from psycholinguistics and computational linguistics and linguistic theory, so the more likely outcome is that you’ll take sophisticated and interesting linguistic models, and then incorporate those into your experiments, and then learn something about how the brain works and how the brain parcellates things and how things interact in the brain on different scales.

And the reverse of that isn’t a thing yet—you don’t study the brain and then say: “I now understand the difference between antecedent-contained deletion in this language versus that language!” So it’s more likely that you’ll use language to learn about the brain than that you’ll use the brain to learn about language.

6) In your field, how much is coming up with clever experiments a limiting factor?

I think ideas are the limiting factor in all scientific fields—the limiting factor is always poverty of the imagination or conceptual resolution.

In our field, we have great machines with fantastic spatial resolution and fantastic temporal resolution, but our conceptual resolution sucks, so other than money the limiting factor is coming up with clever designs that test clever ideas. There are lots of experiments, but 99% of them are bad, or tell you nothing, or tell you about something that you didn’t even want to know about—the really insightful experiments are few and far between because it’s just very hard to do a good experiment.

7) Who came up with this fascinating entrainment experiment?

It came out of team discussions led by Nai Ding and Lucia Melloni—these things always come from teams sitting around, and knowing the question, and knowing the literature, and coming up with plausible things so that you can actually successfully scale the experiment up to what you’re able to measure. You have to be able to say: “If X were true, we would see Y.” But Y has to be something that you can actually measure and quantify, or else it’s no good.

The experiment that you’re talking about allows you to take a glimpse into how the brain—independent of the stimulus stream—operates on simple linguistic representations. You do abstract manipulations and see the signatures in the brain, but those abstract manipulations aren’t in the inputs at all. So that’s a decent experiment and a nice result.

8) During these team discussions, you always anticipate potential objections from imagined reviewers, correct?

That’s part of experimental hygiene. In my labs, we first try to do the experiment a bunch of different times—forwards and backwards and sideways and upside down—to make sure that we’re really doing what we think we’re doing and that the empirical findings are totally robust.

And then we start posing alternative explanations and carving away at them and saying: “Could it be this?” So you have to do this other experiment. And then you say: “Could it be this other thing?” So then you have to do a new experiment.

In the experiment you mentioned, we had to rule out the potential effect of acoustics. And then we had to rule out the potential effect of statistics. And then we had to make sure that it worked across languages. And then we were left with the conclusion that the most likely explanation was that you actually build abstract structures in your head—abstract representations like constituent structure.

The deep irony of that result was that linguists have known for probably 2000 years that you do that in your head, so we were using multimillion-dollar equipment and a team of smart engineers to show a point that you can get for free in a linguistics class. Linguists will sometimes ask me: “Wait, you needed to do this huge complicated experiment to show that there’s constituent structure?”

But the more charitable view is that it’s important to be able to show how these phenomena are implemented, and how the brain does these operations, and which mechanisms are involved.

9) That experiment wouldn’t shock Chomsky, right?

Ironically, that paper actually appeared on 7 December 2015, his birthday.

10) Did you ever put people with disorders into that experiment?

I haven’t, but other people are actually doing that right now. Many labs are now doing experiments based on that experimental paradigm—it’s a very useful and flexible paradigm.

A Chip for Italian

1) Do you have a dream experiment that you would do if money were no object?

I have a thought experiment where I’d insert a language into a brain—I could inject a bag of Italian words into your head and then you’d be bilingual. If I knew the exact parts list from a cognitive science perspective and the exact parts list from a neuroscience perspective, I could code up a language like Italian or something and stick it in your head. If I understood from a mechanistic point of view how information is stored and how operations are done, it would literally be like a computer chip that I could put into your head.

2) But words are always linked to memories and associations.

Yes, but at some point there has to be an interface to memories or to the concepts that underpin memories and associations. To know a word is to know a bunch of things—sound features, letter features, meaning features—and as you and I have this discussion, we go back and forth and actualize these things at the sensorimotor surface, so there has to be some code that allows for words to come in as sound and go out as sound. You just read the phrase “dream experiment”, and then you translated it into some code that relates to some ideas or concepts in your head, but when you said that phrase it came out as sound. So that phrase’s format had to include letters, the ideas themselves, and sound. And so the code must be very abstract, and must be usable in real time.

There’s a really, really important part of the brain—and there’s likely a network involved, rather than just one part—that sort of compiles all of the information from different sources. And those sources could be auditory, semantic, motor, or whatever else. And in some sense, that compiler’s properties have to be “shared”, since that compiler has to execute the same operations across different brains housing different languages and generate the same type of neurocomputational primitives. And if I understood how that compiler operates, I could make an “Italian chip” and put that chip right in your brain.

This couldn’t ever happen, but we can extract from this thought experiment some ideas about the nature of the representations and computations that constitute the language system.

3) But if you put a chip for Italian in my brain and the chip included Italian words for kinds of food that I’d never seen or smelled or tasted or whatever, what would those Italian words link up with inside my brain?

You would have to map those sound sequences or letter sequences to certain concepts. Learning words is like a prediction—each word is like a theory that you test. Words appear to you in some frame, and then you know from the frame that the word is probably an adjective or whatever, and then you perform tests to find out the right meaning based on the context.

It’s just a thought experiment because to do that experiment would mean that you know the code that stores things and the code that does operations on stored things.

I’ve internalized from cognitive science that it’s all about representations and computations over representations, so you need to put your money where your mouth is: What are the possible representations and what is the possible suite of operations? You have to put your cards on the table: What are these representations’ formats given that we read them, see them, hear them, and use them in the various ways that we do? There must be a very abstract code, and understanding that code would be a pretty deep insight into how brains and minds are organized.

In studies of multilingualism, we normally take people who speak two languages or four languages and we try to figure out what knowledge of multiple languages means in terms of neural organization. And that research is often really interesting.

But in my view that research hasn’t gotten us very far in understanding either how language works or how the brain works, so my thought experiment flips it around and asks if we could ever know enough about the system to take a speaker of language A and then insert language B (so that they’re bilingual) and language C (so that they’re trilingual) and so on.

And the fact that such an experiment is impossible is also informative: Why is “cognitive insertion” impossible—which conditions make “cognitive insertion” impossible?

Small Brains and Large Brains

1) You’ve got stuff like Merge and Pietroski’s “semantic instructions” and so on. Some neurological machinery must underlie these things, correct?

Right.

2) And the ultimate goal is to explain these things in terms of neurological machinery, correct?

Right.

3) But we don’t yet know the neurological machinery that underlies the various navigation-related computations that a tiny insect brain performs, correct?

We’re getting better, but that’s a similar challenge.

4) So you can imagine that first we’ll figure out the neurological machinery that underlies certain computations in the insect brain, and then we’ll work our way up.

You choose a model system very carefully in order to answer the specific question at hand—some species are useful for studying one thing and not useful for studying another thing, and certain aspects of representations and computations might be best studied in non-human models like crickets or turtles or rhesus monkeys.

It’s not the case that you need to study simple systems and then graduate to so-called higher systems—that’s not necessary.

5) But I thought the computational and the neurological would first be linked in the bee, since its brain is so tiny and you can do invasive experiments.

The bee might be useful if you’ve articulated an extremely specific question about range-finding or direction or azimuthal location or something, since you could then figure out how a small nervous system might implement a certain kind of computation.

Sound localization is a great example because it’s been worked out from soup to nuts in species like the barn owl. And that’s instructive, but it’s instructive about exactly that set of tasks, and it’s not instructive about—for example—word recognition. So you have to take the tasks that you want to study, break the tasks down into their ostensible primitives, and then figure out how those primitives would somehow link to action and storage in the nervous system.

You have to be parochial—certain primitives might be linked in the bee, but only for the bee. Two major reasons to be parochial are degeneracy and multiple realizability—the same task can be executed a bunch of different ways, so a bee can tell another bee where something is with the waggle dance, but I could use language to tell you where something is and that would accomplish the same task.

You want to select ethologically relevant tasks for a creature that has the relevant neural computation—as that 2017 paper argues, neuroscience that’s addressed to understanding behavior is bankrupt and boring and just noodling around unless you actually have some theory or hypothesis or model when you investigate things.

6) I’m glad to hear that—I thought that barriers to understanding insects would imply even greater barriers to understanding larger brains.

That’s not the case—for example, we can learn a ton about oscillations and time constants and low-level cellular phenomena from looking at crickets and other animals in which they’ve really worked out the relevant circuitry, but properties like multiple realizability make this inapplicable when it comes to other animals.

Scientists From the Year 3000!

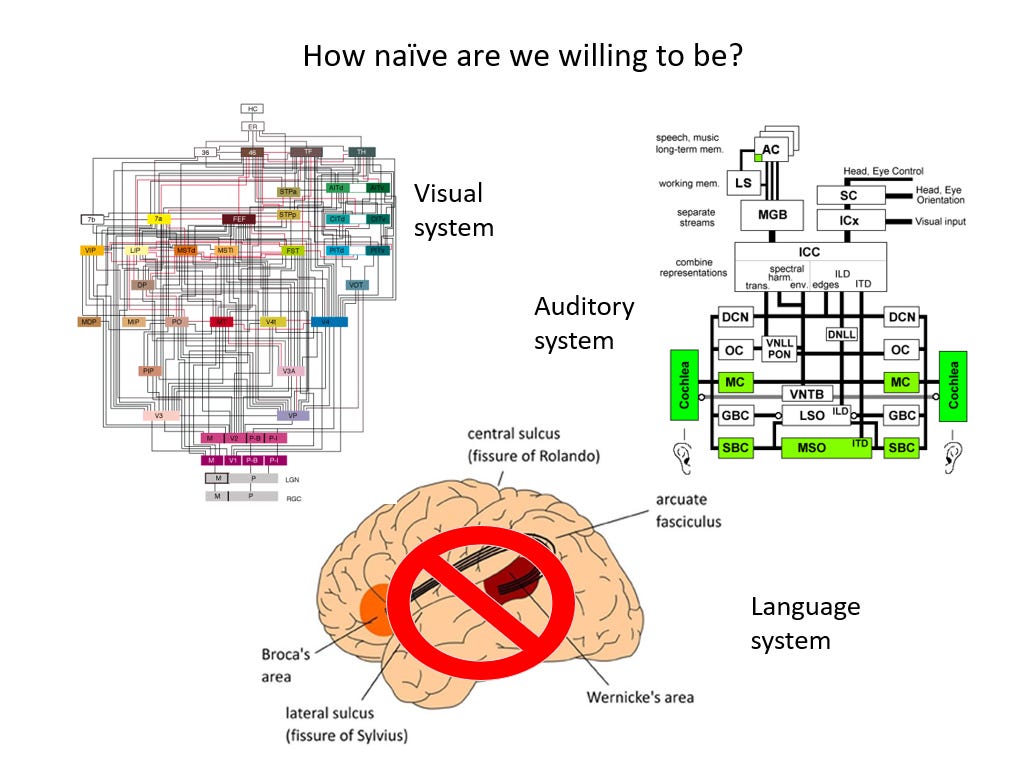

1) Imagine that scientists from the year 3000 came to the present. Suppose that they showed you what the actual diagram for the language system looks like. Relative to the diagrams for the visual system and the auditory system, how complex would the diagram for the language system be and what would the diagram for the language system look like? The slide above, from your excellent 2018 talk, shows three diagrams, and the point of the slide is that the “Language system” diagram is preposterously simple as compared to the “Visual system” diagram and the “Auditory system” diagram.

That’s a good question—I like that question.

First of all, it would obviously look vastly more complicated. In 1000 years—or maybe even in 100 years—it’ll look way more complicated.

The brain is a small place, but it’s also a big place. The current estimate is that the brain has about 86 billion neurons and that 15–16 billion of these neurons are in the cortex. And each individual cell is a super complicated beast in its own right, as you know from your discussion with Gallistel. Moreover, each cell talks to at least 1000 other cells, so we don’t have the kind of formal apparatus to even characterize what’s going on. We’re talking about incredibly high-dimensional and abstract and dynamic spaces—it’s a giant and highly recurrent system made up of billions of elements that we don’t yet understand.

So this is a question of the parts list again. I can confidently say that our parts list in 2050 will be completely different from our current parts list—we’ll know way, way more. The parts list will turn out to be significantly more complicated than what we currently understand and will have very different dynamics than what we currently understand.

The question is how much better our cognitive science parts list and our linguistics parts list will be in 2050. I’m a big fan of linguistics and cognitive science, but I have colleagues and friends who think that it’s all a bunch of speculative folk psychology.

György Buzsáki is one of my friends here in New York, and he’s one of the most famous neuroscientists alive—he argues in The Brain From Inside Out that we should just look at brains and that we’ll learn psychology from looking at brains. My position is that that’ll never work because it’s never worked before in science—the history of science tells you that you can’t look at the implementational level (in Marr’s sense) and then expect function to somehow explain itself.

But György’s point is that psychology is bankrupt and that concepts like “attention” and “memory” and “learning” aren’t very helpful anymore. My perspective is that we shouldn’t get rid of cognitive science or psychology, but instead we should take those concepts and then be more decompositional and philosophical and analytic—we should be cleverer in figuring out what these things actually mean and how we can decompose these concepts into constituent components and subroutines. Then we can map these carefully decomposed parts onto the brain instead of mapping coarse-grained concepts onto the brain.

To take an example, there’s no such thing as “vision” in the brain—“vision” is made up of dozens and dozens of areas and operations and representations. And likewise there’s no such thing as “language” in the brain because language isn’t a monolithic thing—there’s pragmatics; word recognition; the use of quantification; syntax; phonological knowledge of syllables; and so on.

2) You mentioned the unification between chemistry and physics—in that case, the lower level changed in order to unify with the higher level. I guess that you can change the lower level, or change the higher level, or both.

I think that it’s driven by particular ideas and theoretical constructs. There’s no putative “lower level” anymore, and no putative “higher level” anymore—an idea just comes along and says: “If you adopt this idea, at this level of description, I can explain all these phenomena.” So there’s no down and no up, and stuff just unifies and—in an abstract sense—belongs together.

We have great evidence for the primitives of linguistics, and we have great evidence for the primitives of neuroscience, but we have no plausible linking hypotheses to tell us the neurological or biological signature of a morpheme, or of a syllable, or of what it means to do a concatenation operation.

3) Imagine that the scientists from the year 3000 showed you and Chomsky their scientific papers that explained the neurological machinery that underlies Merge. Would you be absolutely floored to read those papers? Would the answers drop your jaw or just underwhelm you somehow?

[Laughs.] Hopefully we’ll know way more in the year 3000. But I’d mostly be surprised if the character of the answer were actually intelligible to me at all—or to Chomsky, for that matter, though maybe it would be intelligible to Chomsky—because I assume that the state of knowledge and the way that the systems are characterized and our tools will be so different that it would seem utterly foreign.

I’d be delighted to see an answer, and it would be amazing to see it because maybe it’ll turn out that the literature’s characterization of Merge was wrong or that some other operation—or set of operations—does what Chomsky thinks Merge does.

4) Yeah, imagine you went back to the days when physicists were confused about chemistry and you showed the confused physicists the quantum physics that explains chemistry—they wouldn’t know what the hell quantum anything was! It wouldn’t be helpful to show them that because it would be gibberish to them!

It would be “incommensurable”, so the ideas that are used to explain stuff might be of such a different character that they’d be like: “The fuck?”

Abstract Structure

1) Since your excellent 2018 talk, how much progress has been made on the topics addressed in that talk?

In the sense of Marr, there’s a computational aspect and an implementational aspect. Brain oscillations play an important role as a temporal organizing principle for all sorts of brain activity, so it would be very weird if this weren’t also true for language, and my labs and other labs have done a lot of work showing that language isn’t an exception in this regard. Many people have taken up that work vigorously, both pro and con, so the needle has been moved quite a bit on that front since 2018.

There’s increasing empirical and theoretical and computational work testing whether language processing makes use of these oscillations. I myself am focused more on speech perception tasks and lexical recognition tasks, and others have focused more on syntax and structure-building, and there’s pretty good reason to believe at this point that this is on the right track. It’s a very big research area, and I’m very happy that I was able to make a contribution to this work.

I think that the needle has moved in this area of inquiry because so many people have pursued research on neural oscillations, and so many people have examined our work from both a skeptical and a supportive perspective, and that level of attention has enriched the field. So people have advanced increasingly detailed models, the experimental work is increasingly sophisticated, and people are doing this work with different analytic techniques and different experimental designs and different languages.

That’s the implementational level, in Marr’s sense. Since 2018, there’s also been lots of activity and very healthy progress on the front that includes the notions of structure-building and structure dependence, since more and more people are using all of the techniques available to look at the human brain and ask: How do you actually build a structure?

Some of our experiments have been shown to be partially right and partially wrong, and there continues to be vigorous debate, but my own view is that the evidence is absolutely incontrovertible that structure-building is essential.

2) So have there been big headlines saying “CHOMSKY IS PROVEN RIGHT BY EXPERIMENTS”?

Our 2015 paper got a lot of attention and spurred a lot of work.

And the blogosphere was like: “This shows that Chomsky was right!” Many follow-up studies have argued either in support of that view or challenged it—it’s an ongoing debate.

But the more popular view in the field is that Chomsky is wrong because deep neural networks tell you that you can do everything with statistical contingencies derived from big training data.

3) Why is it surprising or interesting or important that cortical activity is “entrained to the phrasal and sentential rhythms of speech”?

The experimental results in the 2015 paper are interesting because the relationship between the stimulus and the brain signals shows that there’s entrainment to something that’s not there in the stimulus—you entrain to an abstract structure that you have to construct in your head.

And that something—that’s not there—is constituent structure.
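The logic of that argument (energy at a frequency where the stimulus has none must come from the listener) can be sketched with a toy simulation. This is not the analysis from the 2015 paper. The rates (syllables at 4 Hz, two-syllable phrases at 2 Hz, four-syllable sentences at 1 Hz), the sampling rate, and the idealized cosine “response” are all assumptions chosen for illustration. The 10-second duration is chosen so that each rate falls exactly on a frequency bin, which keeps the spectrum clean.

```python
import cmath
import math

FS = 100.0        # sampling rate in Hz (assumed for illustration)
DURATION = 10.0   # seconds; gives a 0.1 Hz frequency resolution
N = int(FS * DURATION)

# Illustrative rates, assumed for this sketch: syllables at 4 Hz,
# two-syllable phrases at 2 Hz, four-syllable sentences at 1 Hz.
SYLLABLE_HZ, PHRASE_HZ, SENTENCE_HZ = 4.0, 2.0, 1.0


def tone(freq_hz):
    """A unit-amplitude cosine at freq_hz, sampled N times at FS."""
    return [math.cos(2 * math.pi * freq_hz * n / FS) for n in range(N)]


def amplitude_at(signal, freq_hz):
    """Single-bin DFT amplitude of `signal` at freq_hz (one-sided scaling)."""
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / FS)
        for n, x in enumerate(signal)
    )
    return 2 * abs(acc) / len(signal)


# Stimulus: in this toy, the acoustics carry only the syllable rhythm.
stimulus = tone(SYLLABLE_HZ)

# Hypothetical response of a listener who groups syllables into phrases
# and sentences: the 4 Hz component plus 2 Hz and 1 Hz components
# that have no counterpart in the stimulus.
response = [
    s + p + c
    for s, p, c in zip(tone(SYLLABLE_HZ), tone(PHRASE_HZ), tone(SENTENCE_HZ))
]

for label, sig in [("stimulus", stimulus), ("response", response)]:
    peaks = {f: round(amplitude_at(sig, f), 3)
             for f in (SENTENCE_HZ, PHRASE_HZ, SYLLABLE_HZ)}
    print(label, peaks)
```

The stimulus shows a peak only at 4 Hz, while the hypothetical response also peaks at 2 Hz and 1 Hz. A real analysis would work with recorded brain signals, many trials, and statistical comparisons against neighboring frequency bins. It would also need the acoustic and statistical controls described earlier in the interview. This toy version leaves all of that out.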

4) Why is it surprising or interesting or important that the brain builds discrete abstract units that interact with one another based on rules? Chomsky would be like: “Yeah, of course.”

Every linguist would say “Yeah, of course”, so it’s not an interesting claim about linguistics, but from the perspective of processing in the brain you do in fact have to do the experiment to show that this actually happens and that this isn’t conjecture.

The experiment also shows how this happens, shows which regions are involved, and gives some initial hints and tentative explanations about what the underlying processing machinery and underlying mechanisms might be.

5) Why do neuroscientists and engineers not like the idea that the brain builds discrete abstract units that interact with one another based on rules?

Our work on entrainment has helped stimulate a whole cottage industry of arguments pro and con, actually.

First, people have an intrinsic dislike of claims that Chomsky has made over the last 60 years—this dislike is surprisingly often based on hearsay and not based on having read the technical details of Chomsky’s work, and Chomsky’s tough personality contributes to the dislike because Chomsky has pissed off a lot of people.

Second, it’s very popular to claim that we can now infer everything from big data and that our modern approaches—based on neural networks—can do everything without appealing to properties like abstraction or structure.

Deep neural networks have really enthused people—including people in my labs. That approach is very powerful and impressive, and it’s cool that you can see something seemingly very weird happen in silico on your computer. But people make an odd logical leap from showing that trained-up networks are impressive—which they absolutely are—to thinking that that’s how human brains work. And my job is to point out: “Yes, I also think that that’s really cool, but my day job is to figure out how human brains and minds work, and that’s a rather different kind of story.”

Remember that humans can learn things on experimental trial number one, learn complex ideas from sparse data, extrapolate insights that were never in the training data, and say things that they’ve never heard before. And remember that humans have generativity and compositionality. So scientists working on human cognition might look over and see what can be learned from huge statistical models, and we do learn things from those statistical models, but it simply doesn’t follow that that’s somehow the answer to how human brains are organized.

Third, you need to make a sort of leap of faith that cognitive science’s primitives are just as solid as other pieces of evidence.

But these claims come up almost only in the language domain—there are other aspects of cognitive science (high-level vision, motor control, music processing, memory, and so on) where the same arguments could obtain, and yet people for some reason only get high blood pressure when it comes to language. In domains other than language, people’s reaction is: “Yeah, it’s all good.”

6) Is behaviorism still a thing?

It’s not called that anymore, but it’s of course the biggest thing still—it comes back every time there’s a wave of new computational tools.

Those of us who do biology are of course deeply committed to the idea that biology is very rich; that brains have lots and lots of built-in structure; and that brains are really, really complicated devices fresh-out-of-the-box. So we’re all of course impressed that you can learn a bunch of stuff with statistical models, but we’re equally impressed by the fact that a human comes with a six-layered cortex where every cell somehow knows where it has to be!

As far as I can tell, dualism is innate, so people reason differently about bodies and minds, and it’s almost impossible to think about how your mind is organized in the same way that you think about how your knee or liver is organized. But why should different rules apply to your kidney and your brain? Why think about your mind/brain differently from the way that you think about your heart and your guts and your knees and your toes?

In the academic domain, all of this implicit dualism leads to unusual discussions, but I think it’s a natural—and maybe useful—way to think about stuff in the folk domain. Iris Berent’s interesting 2020 book The Blind Storyteller discusses the way that people reason about human nature in the folk domain.

7) What is the explanation for why syllables happen at a rate of 4 to 5 Hz across all languages? That seems so arbitrary—why not 3 Hz or 6 Hz?

Here we’ve moved the needle—here we have an example of a somewhat successful linking hypothesis:

Some languages feel faster, and some feel slower, but these are very, very small deviations, and—when it comes to the physics of speech—the speech signal’s mean rate of change corresponds to a period of about 200 milliseconds.

And we know from linguistics that the mean syllable duration across languages is about the same: roughly 200 milliseconds, or about five syllables per second.
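The link between the 200-millisecond figure and the 4 to 5 Hz range in the question is the ordinary relation between a period T and a frequency f. The 250 ms value is not from the interview. It is included only to show which period corresponds to the lower end of that range.

```latex
f = \frac{1}{T}, \qquad
T = 200\,\text{ms} = 0.2\,\text{s} \;\Rightarrow\; f = \frac{1}{0.2\,\text{s}} = 5\,\text{Hz}, \qquad
T = 250\,\text{ms} = 0.25\,\text{s} \;\Rightarrow\; f = \frac{1}{0.25\,\text{s}} = 4\,\text{Hz}.
```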

And it turns out that one of the brain’s fundamental time constants is about 200 milliseconds—that constant is encoded in brain oscillations called the theta rhythm, and this attribute holds across species and across domains. This time constant is just the fundamental rate at which all brains do perceptual and sensorimotor processing—it doesn’t matter whether it’s eyes, whiskers, ears, mouths, or whatever.

There’s ultimately a biophysical explanation for this time constant—you take a bunch of cells that are made up of channels and molecules and so on, and you stick them together, and for biophysical reasons you get something that “breathes” at this particular rate.

Specificity

1) Can highly specific and bizarre disorders shed light on things?

Yes, deficit–lesion correlation has given us a lot of insight. Alfonso Caramazza has done really fun and interesting work in which he’s found truly weird cases of dissociations—for example, one patient could write (but not read) verbs and could read (but not write) nouns.

These are highly specialized cases, so you have to take them with a grain of salt and you can’t generalize from them, but these cases generate hypotheses about how things might be organized.

And for example, there are patients who specifically struggle to name living things, so the distinction between what’s animate and what’s inanimate seems to be another dimension along which human minds/brains organize knowledge.

2) Can they name viruses? Apparently there’s hot debate in biology about whether viruses are living.

I think that’s specialized scientific knowledge, not folk biology—it’s like asking whether a tomato is a fruit or a vegetable.

3) I was just about to ask you about tomatoes! This could be an urban myth, but I heard that someone was in a skiing accident and they had a brain bleed and then they couldn’t name vegetables, and so I was curious about whether they lost the ability to name tomatoes.

This is another very fascinating area of research: the categorical organization of knowledge. The most famous case is face perception—you can have an unfortunate brain injury where you become unable to recognize and process faces but your visual system is fine and everything else is fine. So it’s fascinating that that piece of knowledge is organized in that particular categorical way.

My favorite one is pure word deafness—it’s very rare because it requires you to have a very particular constellation of brain injuries, and it makes it so that you can’t process speech, so that speech sounds to you like noise or like wind, and yet tests will show that your hearing is just fine. And it’s not that you’ve lost language—you can still read and speak, but you speak haltingly, since you’re not getting any acoustic feedback from your own vocalizations. That means that there’s a single dissociation between hearing, speech perception, and language processing—these are different mental (and neural) operations that can be dissociated.

4) How many different parts of language are encoded in the brain? This blows my damn mind! Does the brain actually separate nouns, adjectives, verbs? What about adverbs? What about prepositions and articles and conjunctions? They teach you these “parts of speech” in school, but you’re telling me the brain actually has all these categories?

Well, where else? Is it going to be in your liver?

5) [Laughs.] But those exact categories? Including articles and conjunctions?

By and large, those are natural classes. Nouns and verbs are cross-linguistically attested. 2000 years of experiments show that you use these categories, and the brain is the body part that does the using, so there must be some representation of that in your head—the questions are: What’s the nature of that representation, are we carving it up right, and is something like “adverb” the right kind of category?

6) Let’s say someone has a brain injury and they can’t read nouns anymore. What if you take a noun like “skiing”? Can they read that?

I don’t know if they did clever experiments like that, and you have to deal with homonyms and words that can be both nouns and verbs, and I’m sure that it’s messy and that there’s bleedover.

The interesting point is the larger point that there are specific categories of activity and that you can have injury or disease that robs you of surprisingly specific forms of knowledge. And that point about the surprising degree of specificity applies to neuropsychology in general.

7) Yeah, like vegetables! That’s mind-blowing to me!

Knowledge of persons is localized a little bit differently from knowledge of animals. And knowledge of tools—things that you use—has a specific location too. There are extensive debates about whether these categories are formed based on how things look or based on whether you can manipulate things with your hands or based on other possible properties.

And a cow is both a tool that you can milk for food and a living thing. And a cow is also an advertisement for chocolate in Germany.

But these associations between certain lesions and certain specific deficits are very well-attested in the clinical literature.

8) Did anyone ever have a brain injury and they just lost adverbs? Nothing else, just adverbs? Because that would prove that the brain actually has that category.

One of the mainstays in the literature on aphasia is that patients have a much more difficult time with function words like “the” and “and” and “or”, and a much easier time with content words like nouns and verbs. So it’s possible to have a lesion where you selectively lose the ability to seamlessly process function words.

But I don’t know about a result where someone lost adverbs specifically.

9) Do some people have trouble asking questions after a brain injury? I even heard that one person had a brain injury and lost the ability to use interrogative words.

These things are more thought experiments than well-supported phenomena. Brain injuries are generally messy—they happen because you have a stroke, and vasculature is very messy and complicated.

People have argued that certain patients have had very particular kinds of formal losses related to A-movement or A-bar movement or wh-movement and things like that. Yosef Grodzinsky had very specific cases that he tried to use to make specific claims about grammatical association, but I don’t know how well those claims have held up in light of subsequent studies, and that literature has fallen out of favor—I think it’s interesting literature, but it got a little ideological because people were saying “This patient proves Chomsky!” or “This patient proves Bresnan!”

10) Could a patient ever have a brain injury and lose Merge or lose constituent structure?

I have colleagues who would disagree with me on this, but in my view structure-building is an elementary operation of the human brain that’s implemented in lots of different places, so in my view it’s not like there’s a “Merge part” of the brain. I can’t imagine an injury that could knock out structure-building.

It seems like Merge is a very basic computational primitive. We haven’t done the right experiments yet to ask whether it’s only performed on lexical items, but it’s probably not just useful for language—it might be useful in vision, motor control, math, and so on.

There’s tentative evidence that brain injury can compromise movement operations, but it’s too early to say, and that’s a bigger deficit than just question-asking.

11) Hypothetically, could we move the needle if we threw ethics out the window and started to make targeted lesions in people’s brains? Obviously everyone knows that that would be highly unethical, but I’ve heard people say that it wouldn’t even be helpful!

No, it would be helpful, since we have enough knowledge at this point that we can formulate pretty specific hypotheses.

We actually do very extensive interventions on patients undergoing epilepsy surgery, and we record from their brains and sometimes stimulate their brains, and I think that’s very interesting.

We already do non-invasive local lesion experiments with transcranial magnetic stimulation—we actually poke local areas of the brain, up- or down-regulate the neural activity in these areas, and see if there are any specific processing consequences. But that’s not the same level of anatomical resolution that we can achieve in experiments with animals.

12) What neurological machinery underlies the separation between these parts of language (e.g., nouns and verbs)?

[Laughs.] If I knew, I’d be rich and famous—or at the very least rich. The speculative answers to this question don’t move me, so I won’t bother you with them.

13) Is it an exaggeration to say that the separation between these parts of language is ultimately encoded into human DNA?

It’s a hypothesis that can eventually be tested—if we’re talking about ultimate reasons, I don’t know what the alternative is.

14) Isn’t that kind of mind-blowing?

In your body, there’s a liver over there and a kidney over there—why should the brain be that different? So it’s not surprising that we see that particular structures are hugely consistent across brains, despite interesting individual variability. For example, the superior temporal gyrus is always organized in a very particular way across people—informally speaking, that’s where phonological operations happen. So clearly the blueprint that builds our brains has some organization written into it.

There’s a remarkable genetic instruction set that builds our brains. Language riles people up, but it’s a very simple and important fact that the cortex has six layers, and so as the brain forms, the cells have to grow and grow and grow, and then there’s a genetic command that says “You’ve reached your target!” and then the cells turn left and stop growing in the previous direction. How’s that supposed to work?

15) It’s not well understood how cellular differentiation works and how each individual cell knows what it needs to do to implement the blueprint, right?

It’s increasingly well understood that there’s a very rich and dynamic and complicated part of the genome that conditions to an extreme degree how the six differentiated layers of your cortex form, and each of the six layers has a particular wiring diagram.

16) In the year 3000, could they theoretically use CRISPR to manipulate human DNA so that a person was completely normal in every respect except the person lacked the category of adverbs?

That would be wicked. But then they could actually insert languages like Italian right into the DNA so that these languages would be part of the hardware.

17) Could you just write knowledge of physics and knowledge of quantum physics into the DNA so that that would be part of the hardware too?

That would be the idea.

18) There’s a lot of knowledge written into the brain’s blueprint already, I guess.

I mean, just open your eyes and you see 3D structure—how does that work?

Gallistel’s Project

1) I’m trying to get molecular biologists to pay attention to Randy Gallistel’s project—see my piece with Fredrik Johansson. Do you think that there’s an engram in that cell?

The ferret study from the Hesslow lab is clever and cool, and they’ve more or less figured out how to get to the cascade’s beginning part and end part. The data are the data, and I think that the most straightforward and most parsimonious interpretation of the data is that there must be an engram buried in the Purkinje cell.

But I’m not sufficiently knowledgeable to be able to rule out any alternative explanations—for example, some kind of structural change in the cell rather than a polynucleotide-based change.

I really do love Gallistel’s idea that there needs to be some kind of “digital” mechanism to do digital-type storage.

2) Will there be a Nobel Prize if someone finds the engram in there?

In terms of the significance of the finding, I wouldn’t necessarily worry about whether it’s a Nobel Prize or not, as that’s not a particularly great indicator.

But it’s absolutely worthy of a huge amount of attention, and it would be incredibly consequential.

3) Which molecular biologists should I contact?

I’d suggest Tomas Ryan from Trinity College Dublin.

4) What will it take to break through on this?

At the moment it’s an extremely interesting and suggestive experiment, but for the people in molecular biology to be really moved, you need some person—either in a team with Gallistel or not—to test this idea, and once there’s a significant result in a significant journal then people will say: “I want to try it. I want to replicate it. I want to change it.”

People are driven by enlightened self-interest, so this will just be a quirky thing at the edge until an experiment of this form becomes something that people want to do because it’s in the interest of their own lab work.

It’s partly social stuff—Gallistel needs to meet a lot of people and talk to a lot of people. At some point, someone will say: “I think I have an idea of how to do an experiment like this.”

But things are driven by papers and postdocs and results and grants, and just because people think that Gallistel’s idea is really cool doesn’t mean they’re going to say: “Fuck yeah! I’m gonna change my research for a year or five and do that!”

If you’re approaching the engram from both ends of the cascade, you should at least be able to find an initial intermediary stage at some point.

I should actually call Gallistel and chat with him about this—my own hunch is that he should talk to people in computational biology or synthetic biology, since he could ask them: “What would be a simulated experiment or a set of steps that would go in this direction? And once the simulation points to some of these intermediate things, we’ll know what a specific experiment would be.”

It might be the kind of thing that deep learning actually is good for, since it’s about pattern recognition: you’ve got a bunch of things at the input stage and a bunch of things at the output stage, and you’re trying to figure out what’s in the middle. And if you have a good characterization of the input and a hypothesis about the output stage, you can then query all the intermediate layers and see what kinds of representations you can come up with.

A simulation might let you get some traction on the problem and might let you make some predictions you could test in a different way or in a different cell, and if you had a very well-worked-out computational model then people might take it more seriously and might want to take a look at some constituent operation or subroutine.

It’s also important to remember that it’s hard for people to get excited about time intervals. My friend Sandeep Prasada has a very short and very clever and very five-star piece in Cognition that extends Gallistel’s argument about formal features to things that we actually care about, like apples and cats and dogs.

So Prasada says to Gallistel: “That’s a really good argument, but let’s even expand it to other concepts.”

But remember that you’re dealing with a situation where 99.99% of the people are of the conviction that the problem of memory’s underlying mechanism has been largely solved and that the information is stored in synaptic patterns of connectivity.

I agree completely with Gallistel that the standard story of memory is untenable, but I don’t necessarily agree on what the correct alternative story is because I haven’t worked on the issue very much. And it’s extremely interesting to explore the story with the Purkinje cell no matter what the substrate turns out to be—no matter what the answer is, it’s unbelievably interesting. If the Purkinje cell does this thing, no matter the mechanism it will really be a game-changer.

It might also be important for Gallistel to reach out to people—maybe younger people—and get them to write some opinion pieces and/or think pieces in the literature that molecular biologists read. I just mean pieces that say that the Purkinje cell thing merits a couple of studies. But the message needs to come from young, hip people who have a voice in the field.

5) What do you think about Pietroski’s comment to me that Pietroski has “no better idea” than Gallistel’s “about how biology could implement the instructions” that Pietroski talks about?

Instructions or operations are different from stored primitives—one could imagine that an operation’s terminal representations (the primitives) are stored in multiple different ways, so maybe intracellular storage is only the right answer for certain kinds of pieces of information. Maybe highly frequent processes like concatenation (one part of Merge) are hard-coded into cellular ensembles’ structural features, since it makes sense energetically to have that available all the time and to not have to write it in and out of an intracellular engram all the time.

I don’t see any need to have a one-size-fits-all solution to the engram issue, but you definitely need some kind of story about the engram that explains how long-term representations are stored.

6) What do you think about Pietroski’s further comments to me regarding Gallistel’s project? Pietroski said to me that Pietroski wants to know how “lexical items and the relevant combinatorial operations are biologically implemented”; that crawling comes first and then walking and then running marathons; and that Gallistel’s project might allow us to find out for the first time “how any memories or computational operations are biologically implemented”.

It would indeed be a game-changer to find an engram for any stored variable.

Philosophy

1) Chomsky says that language use is “appropriate to circumstances—typically—but not caused by circumstances, or even elicited by them”. Why can’t I give the exact same quote—that Chomsky gave—about my decision to move my finger?

[Moves finger around in creative way.] You can—that’s the Creative Aspect of Finger Use.

2) [Laughs.] So what is Chomsky referring to then?

He’s not saying that there’s any special mystery about language in this regard—it’s just an anti-behaviorist line, since the behaviorist line was that stimulus–response contingencies cause everything.
